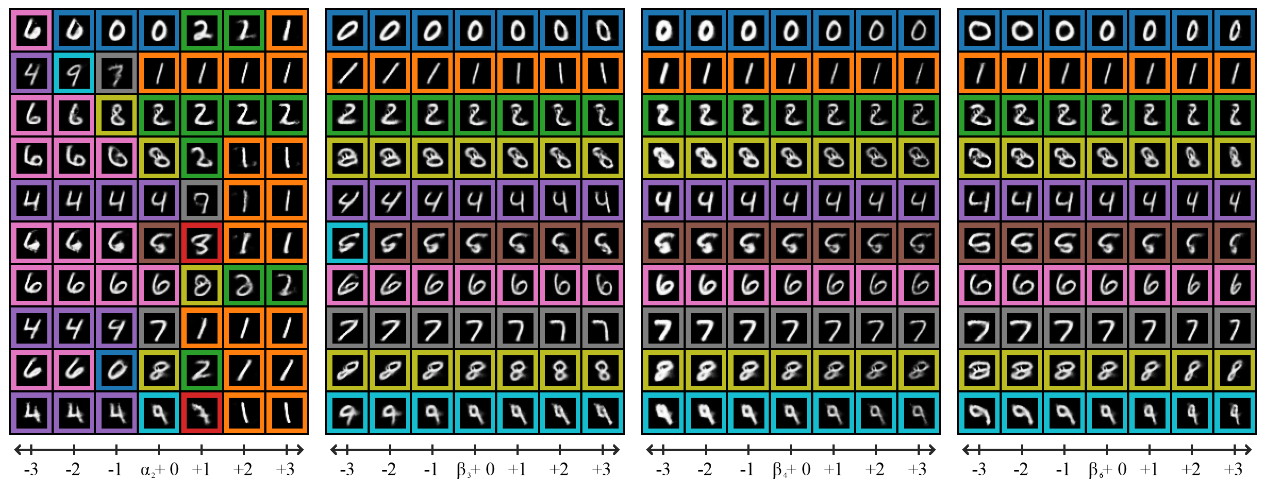

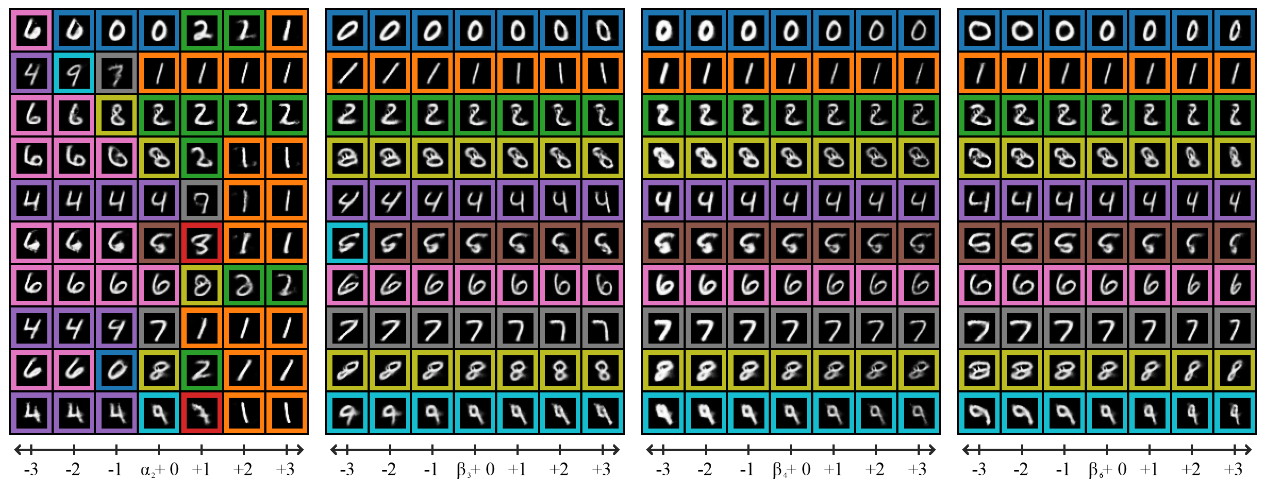

The hand-written symbols are generated. The coloured border displays the judgement of the classifier.

The left image shows the effect of changing the parameter α2, for each row from left to right. Notice how the digits change and the classification changes with them. This parameter therefore affects a property that defines the digit.

The three images on the right show parameters β3, β4, and β6. Notice how the aesthetics of the digits change (tilt, thickness, and width), but the classification stays the same. These properties therefore do not define the digit.

Now we know that the classifier does not take these properties into account.

Fairness, Accountability, Confidentiality and Transparency

Instead of conventional lectures and tests, the course Fairness, Accountability, Confidentiality and Transparency in AI from my Master's was one large project.

In the span of four full-time weeks, we had to choose a scientific paper that dealt with fairness, accountability, confidentiality or transparency in AI and carry out a reproduction study as part of the ML Reproducibility Challenge 2020. This means verifying a paper's findings by rebuilding its methods, recreating its results and/or creating new experiments that follow the same line of thought. Together with the bright Simon Mariani, Gerson Foks, and Tomas Fabry, we reproduced the paper Generative causal explanations of black-box classifiers.

In this paper, a framework is created that makes it possible to control the output of a neural net by adding a number of adaptable parameters that either change the fundamental output or only change its appearance. Imagine a neural net that generates hand-written numbers, such as those in the MNIST dataset. Next, a classifier is trained to read those hand-written digits and classify them.

Let's say the network produces a hand-written number 1. How does one know it is a 1? For example, by saying it is a straight line from top to bottom with equal spacing on the left-hand and right-hand side.

If we were to make the output more bendy, we would no longer classify it as a 1, but maybe as a 6. This, therefore, is a property that is bound to the definition of a '1'. There are also properties that do not affect the classification of the digit, such as its thickness. By using the classifier, the network learns to separate these properties into a set of properties that define the digit and a set of properties that do not. This is an attempt to make AI more explainable: we can figure out how each parameter affects the network, its output and the judgement of the classifier.
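The split between defining and non-defining properties can be illustrated with a small toy sketch. It is not the model from the paper: `generate`, `classify` and `sweep` are hypothetical stand-ins written for this page, with `alpha` playing the role of a property that defines the digit and `beta` a purely aesthetic one (stroke thickness). The idea is only to show what "sweep one parameter and watch the classifier" looks like.

```python
# Toy sketch only: generate() and classify() are hypothetical stand-ins,
# not the generative model or classifier from the paper or our code base.

def generate(alpha, beta, size=12):
    """Draw a glyph on a size x size grid of 0/1 pixels.

    alpha: width of the gap inside a loop in the lower half (0 = no loop).
    beta:  stroke thickness in pixels.
    """
    img = [[0] * size for _ in range(size)]
    left = 1
    # Vertical stroke from top to bottom: on its own this reads as a "1".
    for r in range(size):
        for c in range(left, left + beta):
            img[r][c] = 1
    if alpha > 0:
        # A second stroke plus two bars closes a loop, giving a "6"-like shape.
        right = left + beta + alpha
        for r in range(size // 2, size):
            for c in range(right, right + beta):
                img[r][c] = 1
        for c in range(left, right + beta):
            img[size // 2][c] = 1
            img[size - 1][c] = 1
    return img


def classify(img):
    """Return 6 if a row through the lower half crosses two strokes, else 1."""
    row = img[3 * len(img) // 4]
    strokes = sum(1 for i, v in enumerate(row) if v and (i == 0 or not row[i - 1]))
    return 6 if strokes >= 2 else 1


def sweep(values, make_latents):
    """Classify the glyph generated for each value of a single swept factor."""
    return [classify(generate(*make_latents(v))) for v in values]


# Sweeping alpha changes the label, so alpha controls a defining property.
alpha_labels = sweep([0, 1, 2, 3], lambda a: (a, 1))
# Sweeping beta leaves the label alone, so beta is purely aesthetic here.
beta_labels = sweep([1, 2, 3], lambda b: (0, b))

print(alpha_labels)  # [1, 6, 6, 6]
print(beta_labels)   # [1, 1, 1]
```

In the real framework the generator and the split into the two groups of parameters are learned rather than hand-written; the sketch only mirrors how the resulting sweeps are read.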

Reproduction

Our reproduction consisted of three parts: a complete rebuild of the code base using PyTorch Lightning (no code was available), experiments on more complex datasets, and a completely new experiment. In this experiment, we purposefully use a poorly performing classifier. As the framework highlights which parameters define the classification, changing these parameters and observing the results should give us insight into why the classifier is performing poorly. For example, suppose the classifier is tasked with classifying hand-written numbers as either 6 or 1 by only observing the total brightness.

Intuitively, the classifier will learn that brighter images are a 6 and less bright images are a 1.

But let's assume we do not know this. The framework learns to create parameters that change the classification and parameters that only change the aesthetics. By changing the first kind of parameter, the total brightness of the output changes.

The framework has, therefore, correctly figured out what affects the decisions of the classifier, explaining the classifier.
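To make the brightness shortcut concrete, here is a minimal sketch of such a deliberately poor classifier. The 5x5 glyphs, the threshold of 8.5 and the helpers `dim` and `thicken` are assumptions made up for this illustration; they are not the data or the classifier from our experiments.

```python
# Toy sketch only: the glyphs, the threshold and the helper names are
# illustrative assumptions, not taken from the paper or our experiments.

ONE = [
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
]
SIX = [
    [0, 1, 1, 1, 0],
    [0, 1, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 0, 1, 0],
    [0, 1, 1, 1, 0],
]


def total_brightness(img):
    """Sum of all pixel values in the image."""
    return sum(sum(row) for row in img)


def brightness_classifier(img, threshold=8.5):
    """Deliberately poor classifier: it only looks at the total brightness."""
    return 6 if total_brightness(img) > threshold else 1


def dim(img, factor):
    """Scale every pixel by the same factor; the shape stays identical."""
    return [[v * factor for v in row] for row in img]


def thicken(img):
    """Widen every stroke by one pixel to the left and to the right."""
    width = len(img[0])
    return [
        [max(row[max(c - 1, 0):min(c + 2, width)]) for c in range(width)]
        for row in img
    ]


print(brightness_classifier(ONE), brightness_classifier(SIX))  # 1 6
print(brightness_classifier(thicken(ONE)))                     # 6
print(brightness_classifier(dim(SIX, 0.5)))                    # 1
```

A thick 1 is labelled 6 and a dimmed 6 is labelled 1, even though a person would still read both shapes correctly. This is the kind of behaviour the sweeps are meant to expose: the parameter that flips the label changes brightness, not shape.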
